TL;DR

Crypt-Keeper is a simple and scalable web service for keeping file encryption credentials out-of-band from the file being protected and exchanged. This is different from many contemporary file exchange services in that the storage mechanism does not have to be trusted to keep your data secret. Crypt-Keeper leverages AWS S3 for storage to take advantage of its nearly infinite storage capacity, ease of management, availability, and, of course, huge scalability.

In my last post, Distributing Secrets, I talked about some of the problems associated with internal distribution of secrets across a deployed architecture. Unfortunately, this is not the only area where we run into issues with distributing secrets; many of us also need to share data with customers. In many of those cases the data we are sharing is sensitive and should be protected. Of course, there are well-known methods for keeping data secure in transit, such as SSL, but these do not protect the data at rest. In the next section we’ll go over the problem and some existing partial solutions.

The Problem

There are multiple existing solutions for sharing files. Dropbox, Google Drive, S3, Google Cloud Storage, Azure Storage, FTP or SFTP are all possibilities among many others. So, why do we need yet another solution? Let’s first look at some of the other options, then we can see why we need something new to augment the available feature set. We’ll see that each option suffers from some combination of the following problems: P1) locality of compute resources in the architecture, P2) the need to trust the infrastructure to not snoop on encryption keys, or P3) lack of infrastructure or service to maintain encrypted files or shared key materials.

Off the shelf solutions

Dropbox is primarily focused on syncing user data across machines and on user-to-user sharing, but more recently it has added an enterprise offering. Of course there is programmatic access, and this would certainly be a possible solution to file exchange, save a few issues. First, Dropbox does not offer compute services, so my processing infrastructure would still need to pull a file from non-local network storage before completing its task, AKA P1 above. Second, I need to trust Dropbox that they really do encrypt my data and pinky promise not to use those keys to snoop on me, P2. Third, I could encrypt everything before it goes into Dropbox, but then I still need to maintain those key materials somewhere, so P3.

Google Drive is fairly similar to Dropbox, but Google does offer some compute services. I don’t believe Google Drive is being marketed at enterprise scale at this point, but I’ve seen some enterprising small businesses attempt to use it for sharing files internally. Hypothetically I could use Google Compute Engine, so that problem is solved. I’m not judging those who have ventured out to alternative cloud providers, but honestly when you say cloud I – and probably 90% of the rest of the industry – immediately think AWS, with Azure as a close second. I don’t think it’s just me, as this Gartner report shows similar results. So, the reality is I’m probably not using Compute Engine. Even if I were, I would still need to either trust Google or encrypt my own docs. So, Google Drive suffers from P2 and P3. Note that by my reading of the terms of service, Google is not even pretending not to snoop on your data.

S3 is more of a building block than either Dropbox or Google Drive. You basically get a RESTful interface to abstract storage containers with a few different choices of SLA, and a very long track record of meeting or beating those SLAs at what I think is a great price. Basically, if you are in a situation where S3 can work for your data needs, you will probably do the math and find it is the best option. Now, if you find yourself in a position where S3 doesn’t work for your needs and you can’t point to a legal requirement or fiduciary duty that tells you why, then you need to question the direction of your business. If you’ve done the math and concluded that it is cheaper to build your own datacenter, then you are wrong. Seriously, you forgot to include something in your math. That said, S3 has the same problem as the previous systems: I either trust Amazon not to use my keys, or I encrypt before transferring documents. Here again, S3 has problems P2 and P3.

Google Cloud Storage (GCS) is Google’s equivalent to Amazon S3 and is part of Google Cloud Platform. It offers several different SLAs and an API to the service. I do not personally have any experience using GCS, but I do know there are several enterprises that use the Google platform. GCS suffers from P2 and P3, just like the other services mentioned here.

Azure Storage has a somewhat more complicated model than S3 but does offer competitive options. I had the opportunity to chat with a senior engineer on the Azure Storage team some months back, and I have to say I’m impressed with the technology and the direction. That said, Microsoft is quickly recovering from, but still affected by, years of very poor management. However, I have ZERO experience running production on Azure, and I can’t currently imagine when that would change. To my knowledge, Azure Storage suffers from the same issue as the other systems reviewed here in that I either need to trust Azure or encrypt my own docs. Starting to see a pattern? Azure suffers from P2 and P3.

Roll your own solutions

I’m sure there are other options, but in most situations, if I’m exchanging documents with a customer, my service will be based on some form of FTP or SFTP, or I’m going to skip the intermediary and use S3, since for many companies that is the ultimate destination for the data anyway. Given that, I’ll try to explain why I think FTP and SFTP are not the right solutions.

Let’s get specific about what I mean by FTP. FTP has been around for a long time, so there are plenty of variations to choose from. RFC 114 was introduced in 1971, well before TCP/IP was even the standard for the Internet; it is only of historical note today, as it has been superseded by RFC 765 and RFC 959, which update the protocol for use on TCP/IP. RFC 1579 recommends passive mode so that FTP works through firewalls. RFC 4217 defines the use of FTP over TLS, but note this was not published as a standard until October 2005. So basically, when I say FTP I mean any and all of these standards, including what is typically called FTP, FTPS, FTP(ES), FTP over SSL, or FTP with TLS. There might be other names, but we are basically looking at a control pipe and a data pipe, either of which may or may not be secured. That’s FTP. Obviously I can deploy my FTP service in a way that solves P1, but nothing about FTP addresses P2 or P3 in any way.

Now, SFTP refers only to the SSH File Transfer Protocol. Despite the similar name, this is not FTP. It is a subprotocol of the SSH (Secure SHell) protocol. It offers the same level of transport layer security that SSH does, and that is all. We end up in a similar situation with SFTP as with FTP. We can easily solve the P1 problem depending on how we deploy services, but there is no built-in solution to either P2 or P3.

Why none of the above really work

Now that we’ve established who the players are, I’ll tell you I would not use either FTPS or SFTP. The reasons are simple. First, they suffer from the same issue as the third-party services mentioned above in terms of P3. They can secure the transport of the data, but don’t really help with the encrypted storage problem, P2. Second, I don’t think they are easily secured, scaled, or managed. For example, it is possible to configure an FTP server so that it allows non-secure transmission of files based on choices the client makes. Third, I’m already going to be running HTTPS somewhere if I support any websites or services. Since I’m already putting effort into securing, scaling, and managing HTTPS, I’m going to use it. And since I’m using HTTPS anyway, I might as well use the storage service someone else has already created and just move the whole thing to S3. Problem solved, except remember that no matter what protocol I use, I still have the issues of storing the file unencrypted and managing keys, or what I’ve been calling P2 and P3. Enter Crypt-Keeper.

Crypt-Keeper solves the problem of storing a file at rest using a secure encryption key that I can easily share with any other Crypt-Keeper user on my own trusted infrastructure. It does this by leveraging the security and scalability of S3, but without completely trusting S3 or the AWS platform.

The Solution – Crypt-Keeper

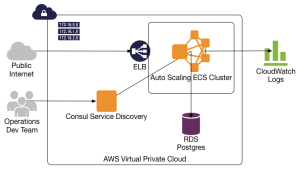

Crypt-Keeper is a reference implementation of the Secure Document Service (SDS), implemented as a Python client and a Django web application. The Python client exposes a native Python library for simple programmatic access to Crypt-Keeper, and has a command-line interface for scripting simple file transfers. The Django web application features a management interface for the service and a simple RESTful service interface that can be used with any HTTP client.

The SDS protocol is so simple that you can execute the three necessary use cases using a client like curl or Paw. To upload a file, you POST metadata about the file to the SDS file upload API endpoint. You get back information including a document ID, an encryption key, the encryption type, and a signed S3 URL to PUT the file to. If you use the Python client, it will automatically encrypt and stream the file to S3. You can throw out the encryption key when you are done; the download endpoint will retrieve your key when needed as long as you have the document ID. Next, you can use the SDS file share API endpoint to assign access privileges to another Crypt-Keeper user. Finally, you, or any user with access rights and the document ID, can use the SDS file download endpoint to retrieve the document metadata, encryption information, and a temporary signed S3 download URL. Of course, the Crypt-Keeper Python client makes this even easier, since it grabs all the information needed from the SDS API endpoints, applies the correct encryption to your file, and uploads to or downloads from S3.
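To make the three use cases concrete, here is a minimal in-memory sketch of the upload, share, and download flow. It is not Crypt-Keeper’s code or its REST API: the class, method, and field names, the owner-only sharing rule, the `AES-256` label, and the placeholder URLs are all assumptions for illustration. What it does show is the property that matters: the key lives only in the SDS record, while S3 is only ever handed a URL and, in the real flow, ciphertext.

```python
import secrets
import uuid
from dataclasses import dataclass, field


@dataclass
class DocumentRecord:
    owner: str
    name: str
    key: bytes              # encryption key, held by SDS and never by S3
    encryption_type: str
    readers: set = field(default_factory=set)


class InMemorySDS:
    """Hypothetical stand-in for the SDS endpoints (not Crypt-Keeper's API)."""

    def __init__(self, bucket_url="https://example-bucket.s3.amazonaws.com"):
        self.bucket_url = bucket_url     # placeholder bucket URL
        self.records = {}                # document ID -> DocumentRecord

    def _signed_url(self, doc_id):
        # Placeholder for a real pre-signed S3 URL.
        return f"{self.bucket_url}/{doc_id}?signature=PLACEHOLDER"

    def upload(self, user, name):
        """'POST metadata': SDS creates the document ID and a fresh key."""
        doc_id = str(uuid.uuid4())
        key = secrets.token_bytes(32)    # 256 random bits
        self.records[doc_id] = DocumentRecord(user, name, key, "AES-256")
        return {
            "document_id": doc_id,
            "encryption_key": key,
            "encryption_type": "AES-256",  # hypothetical label
            "upload_url": self._signed_url(doc_id),
        }

    def share(self, user, doc_id, other_user):
        """Grant read access; assumed here to be allowed for the owner only."""
        record = self.records[doc_id]
        if user != record.owner:
            raise PermissionError("only the owner can share this document")
        record.readers.add(other_user)

    def download(self, user, doc_id):
        """Return key material and a download URL to authorized users only."""
        record = self.records[doc_id]
        if user != record.owner and user not in record.readers:
            raise PermissionError(f"{user} has no access to {doc_id}")
        return {
            "document_id": doc_id,
            "name": record.name,
            "encryption_key": record.key,
            "encryption_type": record.encryption_type,
            "download_url": self._signed_url(doc_id),
        }
```

In use, Alice uploads, shares with Bob, and Bob recovers the same key from the document ID alone, which is why the uploader can discard the key after encrypting. A real HTTP service would also need to encode the key (for example as base64) in its JSON responses.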

One of the really great things about the SDS protocol is that it is nearly infinitely scalable. There are two limits to scale: the storage system, which is basically S3 with S3’s limitations, and the datastore for SDS. In Crypt-Keeper we use a traditional SQL backend like MySQL or Postgres. However, if the datastore were replaced with DynamoDB, you could scale much higher than with a traditional DB. So, why didn’t I use DynamoDB in Crypt-Keeper? I want to be able to use Crypt-Keeper on less expensive infrastructure, I want to be able to run the service outside of AWS, and I wanted to get the project to a workable state in 2 to 3 months of my spare time at about 5 hours a week.

So that is Crypt-Keeper. I think it is an elegant solution to a difficult problem, with quite a bit of potential. In the next section I’ll talk a bit about the limitations and future plans.

Limitations and Future Plans

Currently, Crypt-Keeper has a few known limitations. Files larger than 5 gigabytes are not supported because we are not using multipart upload. Amazon recommends using multipart upload for any file larger than 100 megabytes, so this is a change I’d like to work in soon to support larger files. Initial analysis indicates that the changes will be backwards compatible with the initial release of Crypt-Keeper. I delayed the implementation because I wanted to get the code out in the wild sooner, and I just don’t have the time to invest right now. This will likely be one of the first features I add in the near future, although bug fixes will take priority, and the timing will depend on demand for larger file sizes.
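As a rough sketch of what multipart support involves, the helpers below split an upload into parts that respect S3’s multipart limits (parts of 5 MiB to 5 GiB, except the last part, which may be smaller; at most 10,000 parts; objects up to 5 TiB). The function names, the 8 MiB default part size, and the use of 100 MiB for Amazon’s “100 megabytes” guidance are assumptions, not Crypt-Keeper’s planned design. With client-side encryption, `file_size` should be the size of the ciphertext actually sent to S3, not the plaintext.

```python
MIB = 1024 ** 2
GIB = 1024 ** 3
TIB = 1024 ** 4

MAX_SINGLE_PUT = 5 * GIB   # largest object a single PUT can upload (~5 GB)
MIN_PART = 5 * MIB         # minimum part size (the last part may be smaller)
MAX_PART = 5 * GIB         # maximum part size
MAX_PARTS = 10_000         # maximum number of parts per upload
MAX_OBJECT = 5 * TIB       # maximum S3 object size


def needs_multipart(file_size, threshold=100 * MIB):
    """True if an upload should (above threshold) or must (above 5 GiB) be multipart."""
    return file_size > min(threshold, MAX_SINGLE_PUT)


def choose_part_size(file_size, preferred=8 * MIB):
    """Pick a part size >= preferred that keeps the upload within MAX_PARTS."""
    if file_size > MAX_OBJECT:
        raise ValueError("file exceeds the maximum S3 object size")
    needed = -(-file_size // MAX_PARTS)   # integer ceiling division
    return min(max(preferred, MIN_PART, needed), MAX_PART)


def plan_parts(file_size, part_size):
    """Return (part_number, offset, length) tuples covering the whole file.

    S3 part numbers start at 1; only the final part may be shorter.
    """
    parts = []
    offset, number = 0, 1
    while offset < file_size:
        length = min(part_size, file_size - offset)
        parts.append((number, offset, length))
        offset += length
        number += 1
    return parts
```

For a 12 GiB file this picks 8 MiB parts, giving 1,536 parts. A planner like this only decides the byte ranges; each range would then be sent with S3’s UploadPart operation and the upload finished with CompleteMultipartUpload.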

There are some known bugs in the client. The command-line interface does not currently report failures gracefully, and additional testing is needed here. Running nosetests with coverage on the client package currently reports 98% coverage, but as always, numbers don’t tell the full story. For example, there are currently no tests on the command parser, and nosetests completely ignores that file in coverage reports. Perhaps this is user error, but sadly I don’t think it is. I’ve turned on the cover-inclusive options and so on, with no change in output. My experience with coverage tools in other languages is that they report inclusively by default and exclude by configuration. Long story short, I didn’t discover until recently that I had not implemented any tests on the command-line processor, and there are definitely issues in that module. No excuses here: I was looking at total coverage and feeling cocky in the high nineties. Even experienced engineers get to learn something every once in a while.
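As an illustration of the kind of tests the command parser was missing, here is a small `unittest` sketch against a hypothetical argparse-based parser. The `build_parser` function, the `upload`/`download`/`share` subcommands, and their arguments are assumptions, not Crypt-Keeper’s actual CLI.

```python
import argparse
import unittest


def build_parser():
    """Hypothetical CLI layout; Crypt-Keeper's real commands may differ."""
    parser = argparse.ArgumentParser(prog="crypt-keeper")
    sub = parser.add_subparsers(dest="command", required=True)

    upload = sub.add_parser("upload", help="encrypt and upload a file")
    upload.add_argument("path")

    download = sub.add_parser("download", help="download and decrypt a file")
    download.add_argument("document_id")
    download.add_argument("-o", "--output", default=None)

    share = sub.add_parser("share", help="grant another user access")
    share.add_argument("document_id")
    share.add_argument("user")
    return parser


class CommandParserTests(unittest.TestCase):
    def setUp(self):
        self.parser = build_parser()

    def test_upload(self):
        args = self.parser.parse_args(["upload", "report.csv"])
        self.assertEqual(args.command, "upload")
        self.assertEqual(args.path, "report.csv")

    def test_download_with_output(self):
        args = self.parser.parse_args(["download", "abc123", "-o", "out.csv"])
        self.assertEqual((args.document_id, args.output), ("abc123", "out.csv"))

    def test_missing_command_exits(self):
        # argparse reports usage errors by raising SystemExit (status 2).
        with self.assertRaises(SystemExit) as ctx:
            self.parser.parse_args([])
        self.assertEqual(ctx.exception.code, 2)
```

Because nose collects `unittest.TestCase` subclasses, tests written this way should also run under nosetests, and importing the parser module from a test is what brings it into the coverage report in the first place.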

Discovering a failing in my tool chain, or at least in my use of the test coverage data, led me to look deeper into the service’s test coverage. At this point, the only parts of the service with zero test coverage are the management commands that configure the service, which are not on the critical path. As it stands today, the service is at about 75% coverage, but I’m more confident in that number than in the client’s, as I’ve spent quite a bit more time discovering bugs and writing tests for the service than for the client. Also, while the client tests are unit tests only, the Django tests set up a test SQLite DB and actually perform end-to-end integration, minus a real production DB. So, while 75% is less than 98%, that 75% is focused on the critical path of the service. Obviously all these numbers are subject to change over time; checking the GitHub repo and running the coverage reports yourself is the best way to determine the current status.

Another thing I’d like to add is an easy-to-deploy Docker container. I’ve obviously – see previous blog posts – done some work with Docker before, but given the sensitive nature of this program I want to be sure that any official container is secure. I still have more analysis to do on the reference Vagrant machine that is part of the Crypt-Keeper distribution. The goal of the Vagrant install is to serve as a PoC of what a secure production environment might look like. I look forward to community feedback on those aspects.

Conclusion

I had a great time creating Crypt-Keeper. I hope it is useful. I’d love feedback. What do you think? Does Crypt-Keeper solve the problem? Send PRs!

