I like to do things – or at least those things I don’t really enjoy doing – fast. This is why I really appreciate enabling technologies that help me do things quickly. For example, I love Spring Boot because I can build all the boring parts of a service or web app quickly. Or at least I can, assuming I’ve got my development environment set up correctly. It’s never that simple, though. The new hire who just started will need three days to set up his dev environment, which will keep him from building anything for at least part of this sprint. Depending on how complicated the environment was when he started, and how far it has drifted from the docs our team wrote, it may take even longer. Then, of course, deploying to QA and Prod is kind of slow, and any issues mean calling the dev team. Oh, and then there is that 3 AM page because the production environment on server node 3 doesn’t quite match the other nodes.

This probably sounds familiar to a lot of developers. Just replace Spring Boot with whatever killer tech makes your life easier during the brief part of your day when you actually write code, as opposed to the ever-expanding set of other responsibilities that might even be called something like DevOps.

Purpose

The purpose of this article is to introduce an opinionated proof-of-concept infrastructure, architecture, and tool chain that makes the transition from zero to production deployment as fast as possible. To reach the widest possible audience, this infrastructure is based on AWS. I believe that if you have recently created your AWS account you can deploy the infrastructure for free; if not, it costs around $3 per day to play around with, assuming you use micro instances. The emphasis here is on speed of transition from dev to prod, so when I say opinionated I do not mean that this is the best solution for you or your problem – that depends a lot on your specific problem. I will go into more detail on the opinions expressed in this solution throughout this article. At the time of this writing, the infrastructure implementation lacks certain obvious properties needed for a real production environment – for example, there is a single Consul server node. So keep in mind this is a proof of concept that still requires work before it can be used in production.

The Problem

I can develop a service very quickly. For example, I can generate the skeleton of a Spring Boot service using Spring Initializr and implement even a data service in short order. However, any gains I make in development time can be lost in the deployment process, which can be arduous and slow depending on how the rest of my organization works.

Despite the adoption of configuration management tools like SaltStack, there is often drift in production environments. This can be the result of either plastering configuration management over an existing environment of unknown state, or a lack of operational discipline that lets individual changes be made outside configuration management. Micro-services make the situation more challenging because, rather than a handful of apps with competing dependency graphs, I now have potentially dozens of services, each with conflicting dependencies.

Even outside of production there are potential roadblocks. What if I need a development environment that is exposed to the public internet so I can test integration of my service with another cloud-based service?

Docker solves many of the issues of environment drift and dependency management by packaging everything together. However, to be honest, if my organization – or yours – were having a hard time getting me the resources to deploy my micro-service, I doubt introducing Docker would help with timelines in the near term. In the long run, it may be an easy sell to convince an infrastructure operations organization to stop deploying custom dependencies for each supported language and instead create a homogeneous environment of Docker hosts, where they can concentrate their efforts on standardized configuration, log management, monitoring, analytics, alarming, and automated deployment processes. This is especially true if that environment features automatic service discovery that almost completely eliminates configuration from the deployment process.

A Solution

In short, it doesn’t matter whether you are trying to overcome the politics or technical debt of a broken organization or bootstrapping the next Instagram. If you want to deploy micro-services, this article presents a way to use force-multiplying tools to turn a credit card number into a production-scalable micro-service infrastructure as quickly as possible.

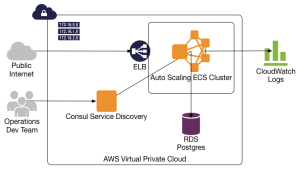

The diagram above shows an abstraction of the AWS infrastructure built below. The Elastic Load Balancer (ELB) distributes service requests across an auto-scaling group of EC2 instances, which are configured as an EC2 Container Service (ECS) cluster running our Dockerized service. Each ECS instance includes a Consul agent and Registrator to handle service discovery and automatic registration. Database services are provided by an RDS instance. The Consul server is deployed to a standard EC2 instance; this will expand to several instances in the production version. All of this is deployed in a virtual private cloud (VPC) and can scale across multiple availability zones.

Prerequisites

- git

- brew – or a package manager of your choice. Install with:

```shell
/usr/bin/ruby -e "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/master/install)"
```

or check the Homebrew homepage for updated instructions.

- VirtualBox – or some other virtualization provider supported by Vagrant/Otto. For example, install with:

```shell
brew tap caskroom/cask && brew install Caskroom/cask/virtualbox
```

- An AWS account.

Setting up your Development Environment

For our development environment we’ll be using Otto. Otto is a tool that layers dependency management and environment setup on top of several other tools, including Vagrant, Terraform, Consul, and Packer. At the time of this writing it is very early in development, so its usefulness for production deployment is limited – especially for Java. However, for the dev environment we get some extra freebies not included with plain Vagrant – namely service discovery and automatic deployment of dependencies.

- We’ll begin by installing Otto:

```shell
brew install otto
```

Otto will automatically install its dependencies on an as-needed basis.

- Next we’ll create or clone the project we want to work with. For the purposes of this demo, I’ve created a Spring Boot based micro-services hello world project that connects to a PostgreSQL database and a Redis instance to complete its work. We use Spring JPA connecting to PostgreSQL to track the number of calls for each user, and we use Redis to track the number of calls in a session. The goal of this contrived hello world is simply to have multiple service dependencies to better reflect a real-world service.

To clone the existing project, execute:

```shell
mkdir -p ~/source
cd ~/source
git clone https://github.com/mauricecarey/micro-services-hello-world-sb.git
cd micro-services-hello-world-sb
```

- We need to define our dependencies using Otto’s Appfiles. You can see the Appfiles I’ve defined for postgres and redis on GitHub. We just need to include these as dependencies in the next step.

- We need to define an Appfile config for our service in order to declare the needed dependencies.

```shell
cat <<EOF > ~/source/micro-services-hello-world-sb/Appfile
application {
  name = "micro-services-hello-world-sb"
  type = "java"

  dependency {
    source = "github.com/mauricecarey/otto-examples/micro-services-hello-world-sb/postgres"
  }

  dependency {
    source = "github.com/mauricecarey/otto-examples/micro-services-hello-world-sb/redis"
  }
}
EOF
```

This file provides a name for our application, explicitly sets the type to java, and declares the dependencies.

- We will compile the environment setup, start the dev environment, and test our service.

Execute the following to compile and start the Otto environment:

```shell
otto compile
otto dev
```

If you don’t have Vagrant installed, Otto will ask you to install it when running `otto dev`. Keep in mind a lot is happening in this step, including a potential Vagrant install, a potential Vagrant box download, Docker image downloads, and dev tool installation. If you halt the environment when finished – versus destroying it – restarts will only take a few seconds. Once the environment is finished building, you can log in with:

```shell
otto dev ssh
```

Now we can build and run the application:

```shell
mvn package
mvn spring-boot:run
```

Open a new terminal, then:

```shell
cd ~/source/micro-services-hello-world-sb
otto dev ssh
curl -i localhost:8080/health
```

You should see HTTP headers for the response plus JSON similar to:

```json
{
  "status": "UP",
  "diskSpace": { "status": "UP", "total": 499099262976, "free": 314164527104, "threshold": 10485760 },
  "redis": { "status": "UP", "version": "3.0.7" },
  "db": { "status": "UP", "database": "PostgreSQL", "hello": 1 }
}
```

This means the service is healthy. Now, in the same terminal, hit the service:

```shell
curl -i localhost:8080/greeting
```

You should see:

```text
HTTP/1.1 200 OK
Server: Apache-Coyote/1.1
X-Application-Context: application
x-auth-token: 2ec567a0-4697-4b1c-a82b-fd99b021e87b
Content-Type: application/json;charset=UTF-8
Transfer-Encoding: chunked
Date: Mon, 29 Feb 2016 22:54:03 GMT

{"id":1,"sessionCount":1,"count":1,"content":"Hello, World!"}
```

Now you can hit the service again, using the token from your own response:

```shell
curl -i -H "x-auth-token: 2ec567a0-4697-4b1c-a82b-fd99b021e87b" localhost:8080/greeting
```

You should see:

```text
HTTP/1.1 200 OK
Server: Apache-Coyote/1.1
X-Application-Context: application
Content-Type: application/json;charset=UTF-8
Transfer-Encoding: chunked
Date: Mon, 29 Feb 2016 22:57:44 GMT

{"id":1,"sessionCount":2,"count":2,"content":"Hello, World!"}
```

I’ll leave it as an exercise to check that the session count resets only after a session timeout, a new session, or a Redis restart. You can provide a name as well. For example:

```shell
curl -i "localhost:8080/greeting?name=test"
```

- Now we need to install and configure the AWS tools to complete our development environment setup. Inside the Otto dev environment (`otto dev ssh`), run:

```shell
sudo apt-get install -y python-pip
sudo pip install awscli
aws configure
```
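The health check and the copy-and-paste of the `x-auth-token` value in the steps above can also be scripted. The helpers below are a minimal sketch, assuming a POSIX shell with curl inside the Otto dev environment; `wait_for_url` and `header_value` are hypothetical names, not part of the project or of Otto. `wait_for_url` relies on Spring Boot's actuator answering `/health` with HTTP 503 when the status is DOWN, which `curl -f` reports as a failure.

```shell
# Hypothetical smoke-test helpers for the hello-world service; not part of
# the original project. Source this file in a POSIX shell with curl available.

# wait_for_url URL [ATTEMPTS] [DELAY_SECONDS]
# Polls URL until curl gets a successful (2xx) response. `curl -f` treats HTTP
# error codes, such as the 503 Spring Boot returns when /health is DOWN, as
# failures.
wait_for_url() {
  local url="$1" attempts="${2:-30}" delay="${3:-2}" i=1
  while [ "$i" -le "$attempts" ]; do
    if curl -fsS -o /dev/null "$url"; then
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done
  echo "gave up waiting for $url after $attempts attempts" >&2
  return 1
}

# header_value NAME
# Reads a raw HTTP response (e.g. from `curl -si`) on stdin and prints the
# value of the first header called NAME, matched case-insensitively.
header_value() {
  local name
  name=$(printf '%s' "$1" | tr '[:upper:]' '[:lower:]')
  tr -d '\r' | awk -v n="$name" '
    !found && index($0, ":") > 0 {
      key = tolower(substr($0, 1, index($0, ":") - 1))
      if (key == n) {
        sub(/^[^:]*:[ \t]*/, "")   # strip "Name:" and following whitespace
        print
        found = 1
      }
    }'
}
```

For example, after sourcing the helpers, `wait_for_url localhost:8080/health && token=$(curl -si localhost:8080/greeting | header_value x-auth-token)` captures a fresh token, and `curl -i -H "x-auth-token: $token" localhost:8080/greeting` should then show `sessionCount` incremented.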

Dockerizing the Service and Distributing the Image

Since we used Otto to build our dev environment, we already have Docker installed, and we can jump right into building Docker images for our service.

- First we need to define a Dockerfile to build a Docker image for the service. Open a new terminal (or exit the Otto environment) and paste the following:

```shell
cat <<EOF > ~/source/micro-services-hello-world-sb/Dockerfile
FROM mmcarey/ubuntu-java:latest
MAINTAINER "maurice@mauricecarey.com"
WORKDIR /app
ADD target/microservice-hello-world.jar /app/microservice-hello-world.jar
EXPOSE 8080
CMD ["/usr/bin/java", "-jar", "/app/microservice-hello-world.jar"]
EOF
```

- Now we copy the fat jar for our micro-service to `target/microservice-hello-world.jar`:

```shell
cp ~/source/micro-services-hello-world-sb/target/microservice-hello-world-0.0.1-SNAPSHOT.jar \
   ~/source/micro-services-hello-world-sb/target/microservice-hello-world.jar
```

- We can build our Docker image with (back in our Otto dev environment):

```shell
docker build --tag hello-world .
```

- We can now run the container (stop the earlier `mvn spring-boot:run` process first so that port 8080 is free) with:

```shell
docker run -d -p 8080:8080 --dns=$(dig +short consul.service.consul) --name hello-world-run hello-world
```

This sets the DNS resolver for the container to the Consul instance running in our dev environment. We map port 8080 of the dev environment to port 8080 of the container. Our container will be named `hello-world-run` for convenience.

- We can test the connection to our container using curl:

```shell
curl -i localhost:8080/health
```

We can check out the logs from our service with:

```shell
docker logs hello-world-run
```

- Next we will build the image for AWS, create an AWS ECR repository, and push the Docker image to our new repo. We do this using a script built for that purpose:

```shell
git clone https://github.com/mauricecarey/docker-scripts.git
export AWS_ACCOUNT_NUM=<YOUR ACCOUNT NUMBER> AWS_REGION=us-east-1 REPO_NAME=hello-world \
       IMAGE_VERSION=0.0.1 DOCKER_FILE=Dockerfile
./docker-scripts/docker-aws-build.sh
```

AWS_REGION stays exported in this shell; the deployment commands later rely on it.
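The copy step above hardcodes the `0.0.1-SNAPSHOT` version in the jar name, so it breaks as soon as the version in the POM changes. A hedged alternative is a small helper, here called `stage_jar` (a hypothetical name), that copies whichever single versioned jar Maven produced to the fixed name the Dockerfile expects, and refuses to guess when it finds zero or several candidates. It assumes the jar keeps the `microservice-hello-world-<version>.jar` naming shown above.

```shell
# stage_jar [TARGET_DIR]
# Hypothetical helper (not part of the original project): copies the single
# versioned fat jar in TARGET_DIR (microservice-hello-world-<version>.jar) to
# microservice-hello-world.jar, the fixed name the Dockerfile ADDs.
stage_jar() {
  local dir="${1:-target}"
  # The pattern needs a "-" after "world", so it never matches the staged copy;
  # Spring Boot's leftover *.jar.original file does not end in .jar either.
  set -- "$dir"/microservice-hello-world-*.jar
  # If the glob matched nothing, the shell leaves the pattern unexpanded.
  if [ ! -e "$1" ]; then
    echo "no versioned jar found in $dir; run 'mvn package' first" >&2
    return 1
  fi
  if [ "$#" -ne 1 ]; then
    echo "expected exactly one versioned jar in $dir, found $#" >&2
    return 1
  fi
  cp "$1" "$dir/microservice-hello-world.jar"
}
```

Run `stage_jar ~/source/micro-services-hello-world-sb/target` in place of the `cp` command, then build the image as before.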

At this point we have built and tested a Dockerized version of our service locally. We have pushed the Docker image to AWS. As you will see later, because we are using Consul for service discovery we will not make any changes to the Docker image, or have to add any additional configuration to deploy on AWS.
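The one place Consul shows up in our Docker workflow is `--dns=$(dig +short consul.service.consul)` in the `docker run` step. If that lookup prints nothing, or more than one address, the unquoted substitution no longer yields a single usable `--dns=<ip>` argument (an extra address would even be taken as the image name). A minimal guard, assuming dig is available as in the dev environment, is a hypothetical `consul_dns_ip` function that prints exactly one IPv4 address or fails with a message.

```shell
# consul_dns_ip [NAME]
# Hypothetical helper: resolves NAME (default consul.service.consul) with dig
# and prints exactly one IPv4 address, or fails with a message, so that
# `docker run --dns=...` is never handed an empty or multi-line value.
consul_dns_ip() {
  local name="${1:-consul.service.consul}" ip
  # `dig +short` may also print CNAME targets; keep only dotted-quad lines
  # and take the first one.
  ip=$(dig +short "$name" | grep -E '^[0-9]+(\.[0-9]+){3}$' | head -n 1)
  if [ -z "$ip" ]; then
    echo "could not resolve $name; is the Consul agent running?" >&2
    return 1
  fi
  printf '%s\n' "$ip"
}
```

For example: `dns_ip=$(consul_dns_ip) && docker run -d -p 8080:8080 --dns="$dns_ip" --name hello-world-run hello-world`.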

Building an Infrastructure on AWS

To set up the AWS infrastructure, we will use CloudFormation to create a stack.

- Check out aws-templates or just grab the full-stack.json file.

```shell
cd ~/source/micro-services-hello-world-sb
git clone https://github.com/mauricecarey/aws-templates.git
```

- Go to the CloudFormation console in your browser and create a new stack using the `~/source/micro-services-hello-world-sb/aws-templates/full-stack.json` file as a template.

- Most of the default parameters should work, but make sure you set the database name (hello), user (hello), and password (Passw0rd) to match the application properties of the app. Note: using the default micro instances, this template creates four micro EC2 instances, one micro RDS instance, and an ELB. At the time of this writing the cost was roughly $80 per month, not including data transfer. Before clicking Create, follow the estimated cost link at the top of the CloudFormation wizard’s final page to estimate your actual costs.

Once the stack is up and running, switch to the outputs tab. Here you will find some useful parameters for the remainder of this article. You should set the following environment variables using the name of the ECS cluster and the ELB URL:

```shell
export AWS_ECS_CLUSTER_NAME=<ECS Cluster Name>
export AWS_ELB_NAME=<Elastic Load Balancer Name>
export AWS_ELB_URL=<Elastic Load Balancer URL>
```

The load balancer URL will look like `STACKNAME-EcsLoadBalan-AAAAAAAAAAAAA-XXXXXXXXX.REGION.elb.amazonaws.com`. The `STACKNAME-EcsLoadBalan-AAAAAAAAAAAAA` portion is what you use to define AWS_ELB_NAME above.
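Rather than copying the ELB name by hand, it can be derived from the URL with shell parameter expansion. A small sketch, assuming a classic ELB whose DNS name has the `<name>-<id>.<region>.elb.amazonaws.com` shape described above; `elb_name_from_url` is a hypothetical helper.

```shell
# elb_name_from_url DNS_NAME
# Hypothetical helper: derives the classic ELB name from its DNS name, e.g.
#   STACKNAME-EcsLoadBalan-AAAAAAAAAAAAA-XXXXXXXXX.REGION.elb.amazonaws.com
#   -> STACKNAME-EcsLoadBalan-AAAAAAAAAAAAA
elb_name_from_url() {
  local host="${1#*://}"        # tolerate an accidental http:// or https:// prefix
  host="${host%%.*}"            # keep only the first DNS label
  printf '%s\n' "${host%-*}"    # drop the last "-XXXXXXXXX" suffix
}
```

For example, `export AWS_ELB_NAME=$(elb_name_from_url "$AWS_ELB_URL")`. If you prefer the CLI to the console's outputs tab, `aws cloudformation describe-stacks --stack-name <your stack name> --query 'Stacks[0].Outputs'` lists the same stack outputs.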

Deploying to AWS

With our CloudFormation stack running and all our AWS CLI tools installed in our development environment, it is now a simple process to define the services we want to run on the ECS cluster.

- We can register our task definitions for both redis and our hello world micro-service with ECS:

```shell
aws --region $AWS_REGION ecs register-task-definition --cli-input-json file://aws-templates/redis-task.json
aws --region $AWS_REGION ecs register-task-definition \
    --cli-input-json file://aws-templates/micro-services-hello-world-sb-task.json
```

- Now that we have our task definitions registered with ECS, we can start the services:

```shell
aws --region $AWS_REGION ecs create-service --cluster $AWS_ECS_CLUSTER_NAME --service-name redis-service \
    --task-definition redis --desired-count 1
aws --region $AWS_REGION ecs create-service --cluster $AWS_ECS_CLUSTER_NAME \
    --service-name micro-services-hello-world-service \
    --task-definition micro-services-hello-world-sb --desired-count 3 --role ecsServiceRole \
    --load-balancers loadBalancerName=$AWS_ELB_NAME,containerName=hello-world,containerPort=8080
```

- Check that the service is up:

```shell
curl -i http://$AWS_ELB_URL/health
```

- Call the service:

```shell
curl -i http://$AWS_ELB_URL/greeting
```

You can perform the same calls we did locally to confirm the service is working properly.
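Since all three hello-world tasks share the single Redis task and the RDS database, a session's `sessionCount` should keep increasing even when the ELB sends consecutive requests to different containers. A rough check, assuming a POSIX shell with curl and the flat JSON response shown earlier; `json_int` and `check_counts` are hypothetical helpers, and `json_int` is a sed pattern match, adequate for this simple payload but not a general JSON parser.

```shell
# json_int FIELD
# Hypothetical helper: prints the integer value of "FIELD" from a flat JSON
# object on stdin, such as
#   {"id":1,"sessionCount":2,"count":2,"content":"Hello, World!"}
json_int() {
  sed -n "s/.*\"$1\"[[:space:]]*:[[:space:]]*\([0-9][0-9]*\).*/\1/p"
}

# check_counts URL TOKEN [CALLS]
# Hypothetical helper: calls URL CALLS times with the given session token and
# fails unless sessionCount strictly increases on every call, whichever
# instance behind the ELB answers.
check_counts() {
  local url="$1" token="$2" calls="${3:-5}" prev=0 cur i=1
  while [ "$i" -le "$calls" ]; do
    cur=$(curl -fsS -H "x-auth-token: $token" "$url" | json_int sessionCount)
    if [ -z "$cur" ] || [ "$cur" -le "$prev" ]; then
      echo "call $i: sessionCount '$cur' did not increase past $prev" >&2
      return 1
    fi
    prev=$cur
    i=$((i + 1))
  done
}
```

Start a session with `curl -i http://$AWS_ELB_URL/greeting`, note the `x-auth-token` header, then run `check_counts "http://$AWS_ELB_URL/greeting" "<token>" 10`.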

Next Steps

In this section I’ll try to answer questions about what is ready for production use and what is not. I’ll also mention what I’m continuing to work on.

Otto

Otto is definitely not ready for creating production environments at this point, though of course we didn’t try to use that feature here. That opinion is primarily based on my experience trying to use it for Java. For other languages, assuming it can actually create a production environment, you would need to evaluate that environment against your own standards and needs.

The portion of Otto we used in this article is not on the traditional production critical path, so evaluating it for adoption is a bit different. Experienced developers should be able to pick it up quickly enough to respond to any issues they encounter on their machines. Otto uses Vagrant for much of the heavy lifting in the development environment, and as such has been very stable in this regard. There are currently enough advantages to the dependency definitions and automatic service discovery setup to convince me to adopt Otto now for development use. I’m currently working on picking up Go as quickly as possible so I can help fix any issues I encounter.

Docker

Docker is based on Linux containers, which have been around for a while now. Take a look at who’s using Docker in production today. Then forget that – mostly. Appeal to authority is my least favorite argument for adopting software or technology. You’ll obviously have to decide for yourself whether you are ready to commit to Docker, but if you are working in a micro-service architecture you need to consider what happens to downstream dependencies – including libraries and language VMs – for those services as they mature.

A few reasons I’m sold on Docker include:

- Standardized deployment packaging across environments from development to production.

- The ability to let each service’s dependencies update at a different pace while sharing deployment environments.

- Potential for higher equipment utilization leading to reduced costs.

A few reasons not to use Docker:

- Fear of change.

- You hate yourself.

AWS Infrastructure Template

The AWS infrastructure I used here still needs some work. As I mentioned previously, it’s not ready for production. Here are some reasons why:

- Not truly multi-AZ,

- Not multi-region – I have not started considering this at this point,

- Logging is not fully configured to make best use of AWS – ideally should use something like fluentd,

- Needs a security audit – I’m not an expert in this area,

- Needs additional configuration and redundancy for ELB to ensure availability,

- Monitoring and alerting need to be defined for the infrastructure components – like Consul,

- The Consul server really should be three servers spread across two AZs at a minimum,

- Needs auto-scaling rules,

- Other things that I haven’t spotted yet – needs peer review.

Additionally, maybe a CloudFormation template is not the right answer – perhaps Terraform, integrated into Otto as a production deployment target, could be. I plan to keep improving this, but it is certainly an area where more eyes will help, so send me PRs.

Note: Unfortunately, I have seen production environments at small companies that lack many of the production-level requirements listed above. For those cases, this may be a better fit for production than a one-off internal solution, since there is at least a pathway to meeting all of those requirements.

Moving Forward

I’m currently working on automating production deployment of Docker images, so ideally I’ll have a follow-up write-up on that soon.

We made use of simple DNS-based service discovery with Consul, but we did not dive into Consul’s further capabilities. In a future update I’ll go into how to store additional configuration in Consul’s key/value store, as well as how to move away from fixed, well-known ports, which enables packing more instances of the same service onto each host.

Conclusion

There are plenty of frameworks and libraries that have helped developers move quickly and deliver services faster, but even with configuration management, continuous deployment pipelines, and DevOps practices there is a gap between development and production. I believe that gap is defined by the expectations formed around delivered artifacts. Docker simplifies those delivered artifacts and moves many former environmental dependencies into the build pipeline. Adding Consul to the mix further reduces environmental configuration hazards by allowing simple service discovery. By standardizing environments around Docker artifacts, we can increase deployment velocity and decrease the risk of dependency issues in all environments. Finally, using AWS ECS to host Docker containers is a quick and easy way to get started with Docker that lets you move from development to production very quickly.